Exploring Next-Generation Processing Architectures

The pursuit of faster and more efficient computing has driven a continuous evolution in processing architectures. As digital demands grow, from artificial intelligence to sophisticated simulations, traditional central processing unit (CPU) designs are being augmented and rethought. This article examines the approaches and foundational shifts defining the next era of computing hardware, and how new designs aim to overcome performance bottlenecks in processors, memory, and the software that ties them together.

The Evolution of Processor Design for Enhanced Computing

The foundation of modern computing has long rested on the von Neumann architecture, where instructions and data share a single memory space. While robust, this model faces an inherent limitation known as the ‘von Neumann bottleneck’: the limited throughput of the path between the CPU and memory caps how quickly the processor can be fed with instructions and data. One response in current processor design is parallel processing. Multi-core CPUs and Graphics Processing Units (GPUs) are examples of this, with GPUs excelling at highly parallelizable tasks such as graphics rendering and machine learning. Further advancements include the emergence of domain-specific architectures (DSAs), tailored to accelerate particular workloads like AI inference or cryptographic operations, moving beyond general-purpose computing to optimize for specific applications. The RISC-V instruction set architecture, an open and royalty-free standard, also offers flexibility for custom hardware development, fostering innovation in specialized processor designs.
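The effect of the bottleneck on a given workload can be estimated with a roofline-style calculation, in which attainable performance is the smaller of peak compute and memory bandwidth multiplied by the work done per byte moved. The sketch below is illustrative only: the function name and the machine figures (1000 GFLOP/s, 100 GB/s) are assumptions chosen for round numbers, not measurements of any real processor.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gb_s: float,
                      flops_per_byte: float) -> float:
    """Roofline estimate: performance is capped by compute or by memory traffic."""
    return min(peak_gflops, bandwidth_gb_s * flops_per_byte)


# Hypothetical machine (assumed figures, not a real part).
PEAK_GFLOPS = 1000.0
BANDWIDTH_GB_S = 100.0

# A streaming kernel doing 0.25 FLOP per byte moved is limited by memory.
streaming = attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GB_S, 0.25)

# A dense kernel doing 50 FLOP per byte moved is limited by compute.
dense = attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GB_S, 50.0)

print(f"streaming kernel: {streaming} GFLOP/s")
print(f"dense kernel:     {dense} GFLOP/s")
```

Under these assumed figures, the streaming kernel reaches only a small fraction of peak compute no matter how many cores are added, which is the bottleneck in numerical form.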

Innovations in Memory and Data Handling Systems

Processing power is often bottlenecked by memory access speeds, a challenge known as the ‘memory wall’. Next-generation architectures are tackling this through various innovations. High Bandwidth Memory (HBM) stacks multiple memory dies vertically and connects them to the processor over a very wide interface, significantly increasing data throughput. Beyond HBM, emerging non-volatile memory technologies like Magnetoresistive RAM (MRAM) and Resistive RAM (ReRAM) retain data without power and promise fast, energy-efficient storage that can sit closer to the processing units. A significant paradigm shift is processing-in-memory (PIM) or near-memory computing, which aims to reduce the physical distance data travels by embedding computational capabilities directly within or adjacent to memory modules. This reduces the latency and energy consumption associated with data movement, improving overall system efficiency and performance for data-intensive applications.
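The data-movement argument for PIM can be made concrete with a toy model of a sum over values spread across memory banks. This is a simplified sketch, not a model of any specific PIM product: the bank count, value size, and function names are assumptions for illustration, and the only quantity compared is the number of bytes that cross the memory bus.

```python
def bytes_moved_host_sum(num_values: int, bytes_per_value: int) -> int:
    """Conventional path: every value crosses the memory bus to the CPU."""
    return num_values * bytes_per_value


def bytes_moved_pim_sum(num_banks: int, bytes_per_value: int) -> int:
    """PIM-style path: each bank sums locally and sends one partial result."""
    return num_banks * bytes_per_value


def pim_sum(banks: list[list[int]]) -> int:
    """Sum computed as per-bank partial sums followed by a final host-side add."""
    partials = [sum(bank) for bank in banks]  # done "inside" each bank
    return sum(partials)                      # only the partials reach the host


# Hypothetical layout: 4 banks, 1,000,000 eight-byte values per bank.
NUM_BANKS = 4
VALUES_PER_BANK = 1_000_000
BYTES_PER_VALUE = 8

host_bytes = bytes_moved_host_sum(NUM_BANKS * VALUES_PER_BANK, BYTES_PER_VALUE)
pim_bytes = bytes_moved_pim_sum(NUM_BANKS, BYTES_PER_VALUE)

print(f"host-side sum moves {host_bytes} bytes")
print(f"PIM-style sum moves {pim_bytes} bytes")
```

The result of the computation is the same in both cases; what changes is how much data has to travel, which is where the latency and energy savings are expected to come from.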

The Role of Specialized Hardware and Software Integration

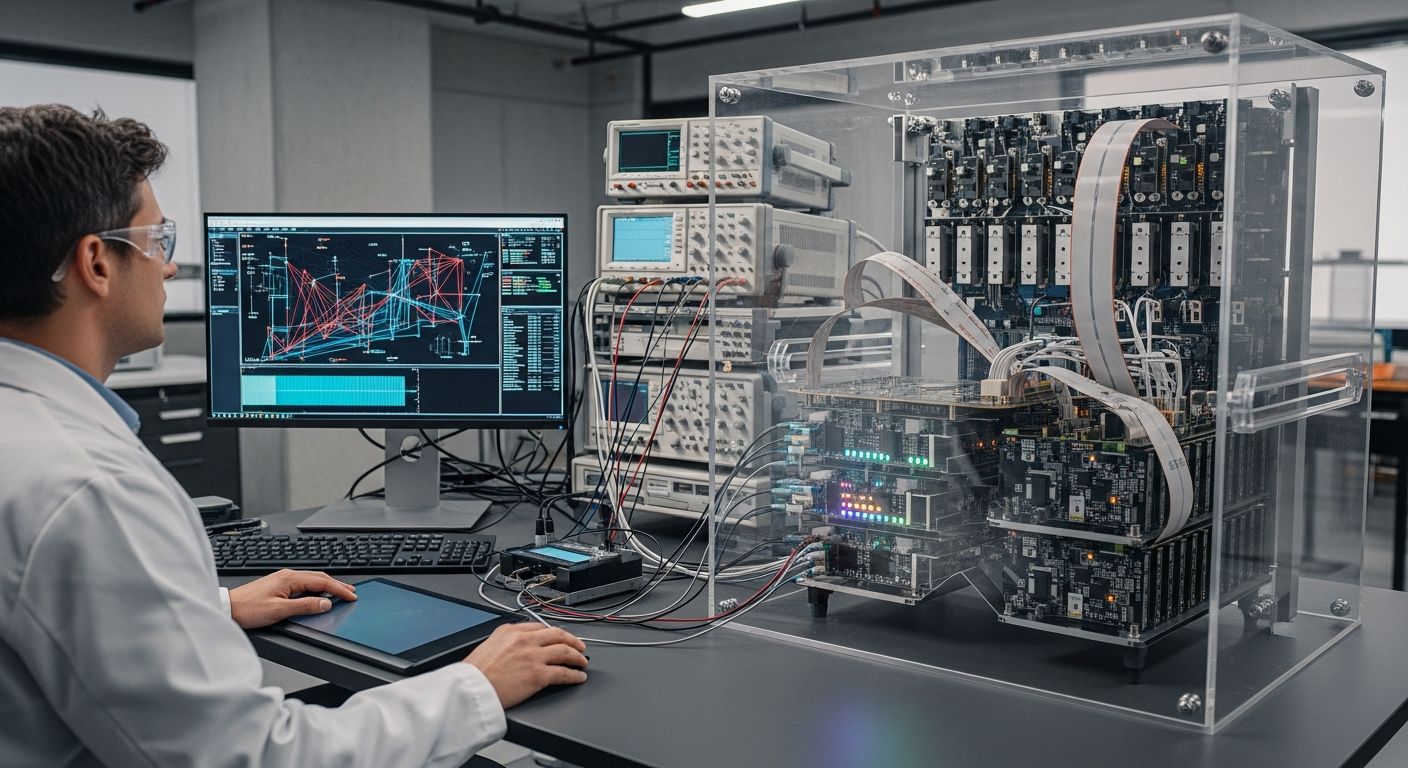

The increasing complexity and specialization of processing architectures necessitate a tighter integration between hardware and software. Specialized hardware components, such as Application-Specific Integrated Circuits (ASICs) and Field-Programmable Gate Arrays (FPGAs), are becoming integral to modern computing systems. These components are designed to perform specific tasks with extreme efficiency, often outperforming general-purpose processors for those particular functions. However, unlocking their full potential requires sophisticated software. This involves developing optimized compilers and programming models that can effectively map complex algorithms onto heterogeneous hardware platforms. The concept of co-design, where hardware and software are developed in tandem, ensures that the underlying architecture is fully utilized, leading to more efficient and powerful digital systems across various applications.

Future Trends in Device and Gadget Development

The advancements in processing architectures are set to profoundly influence the next generation of devices and gadgets, from more powerful and energy-efficient smartphones capable of on-device AI processing to sophisticated Internet of Things (IoT) devices with enhanced local intelligence. The shift towards heterogeneous computing, with its mix of specialized processors, will enable devices to perform complex tasks like real-time augmented reality or advanced sensor fusion with greater efficiency. This development also supports the growth of edge computing, where data processing occurs closer to the source, reducing latency and bandwidth demands on centralized cloud infrastructure. While still in early research phases, quantum computing represents a long-term future direction, promising to tackle certain classes of problems that are currently intractable for even the most powerful classical supercomputers.

Advancements in Display Technology and User Interaction

The power of next-generation processing architectures extends significantly to advancements in display technology and user interaction. More robust and efficient processors are essential for driving higher resolution displays, increasing refresh rates, and enabling more complex visual effects in real-time. This is particularly critical for immersive experiences such as virtual reality (VR) and augmented reality (AR), where seamless, low-latency rendering directly impacts user comfort and engagement. Furthermore, these processing capabilities support the development of more intuitive and responsive user interfaces, including advanced gesture recognition, eye-tracking, and natural language processing for voice commands. The integration of high-performance computing with sophisticated display and input technologies creates a more engaging and interactive digital experience across a wide range of devices.
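The link between refresh rate, resolution, and processing demand comes down to simple arithmetic: a higher refresh rate shrinks the time available to render each frame, and a higher resolution increases the number of pixels produced per second. The sketch below uses common display figures as examples; the function names are illustrative assumptions, and real rendering cost depends on far more than raw pixel count.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / refresh_hz


def pixels_per_second(width: int, height: int, refresh_hz: int) -> int:
    """Raw number of pixels that must be produced each second."""
    return width * height * refresh_hz


for hz in (60, 90, 120):
    print(f"{hz} Hz -> {frame_budget_ms(hz):.2f} ms per frame")

# Example: a 3840x2160 panel refreshed at 120 Hz.
uhd_120 = pixels_per_second(3840, 2160, 120)
print(f"3840x2160 at 120 Hz -> {uhd_120} pixels per second")
```

Moving from 60 Hz to 120 Hz halves the per-frame time budget, which is one reason immersive VR and AR workloads place such heavy demands on the processor and memory system.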

The landscape of computing is undergoing a significant transformation, driven by the demand for greater efficiency and specialized performance. The move beyond conventional processor designs towards heterogeneous architectures, coupled with innovations in memory and tight hardware-software integration, is paving the way for unprecedented capabilities. These developments are not just incremental improvements but foundational shifts that will redefine how we interact with technology and what computers can achieve in the coming years, impacting everything from personal gadgets to large-scale data centers.